Adversarial Text to Continuous Image Generation

Abstract

Implicit Neural Representations (INRs) provide a natural way to parametrize images as continuous signals, using an MLP that predicts the RGB color at an (x, y) image location. Recent work has shown that high-quality INR decoders can be designed and integrated with Generative Adversarial Networks (GANs) to enable unconditional continuous image generation that is no longer bound to a particular spatial resolution. In this paper, we introduce HyperCGAN, a conceptually simple approach for Adversarial Text to Continuous Image Generation based on HyperNetworks, i.e., networks that produce the parameters of another network. HyperCGAN uses HyperNetworks to condition an INR-based GAN model on text. In this setting, the generator and discriminator weights are controlled by their corresponding HyperNetworks, which modulate the weight parameters using the provided text query. We propose an effective Word-level hyper-modulation Attention operator, termed WhAtt, which encourages grounding words to independent pixels at input (x, y) coordinates. To the best of our knowledge, our work is the first to explore Text to Continuous Image Generation (T2CI). We conduct comprehensive experiments on the COCO 256², CUB 256², and ArtEmis 256² benchmarks; ArtEmis 256² is introduced in this paper. HyperCGAN improves the performance of text-controllable image generators over the baselines while significantly reducing the gap between text-to-continuous and text-to-discrete image synthesis. Additionally, we show that HyperCGAN, when conditioned on text, retains the desirable properties of continuous generative models (e.g., extrapolation outside image boundaries, accelerated inference of low-resolution images, and out-of-the-box super-resolution). Code and the ArtEmis 256² benchmark will be made publicly available.
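To make the abstract's ideas concrete, here is a minimal NumPy sketch of a text-conditioned INR. It is not HyperCGAN's architecture. Every name in it (`TextConditionedINR`, `word_attention`, `modulated_linear`, `render`) is hypothetical, and it rests on several stand-ins. Random vectors replace the word embeddings a text encoder would produce. A small per-layer linear "hypernetwork head" maps a per-pixel text context to input-channel scales, applied with StyleGAN2-style modulation and demodulation as a stand-in for the paper's hyper-modulation. A plain per-pixel softmax attention over words only loosely illustrates the idea behind WhAtt. The discriminator's HyperNetwork is not shown. Because each pixel is computed independently from its own (x, y) coordinate, the same model can be queried at any resolution or outside the [-1, 1] training range.

```python
import numpy as np


def coord_grid(h, w, lo=-1.0, hi=1.0):
    """Return an (h*w, 2) array of (x, y) coordinates on a regular grid, row-major."""
    ys = np.linspace(lo, hi, h)
    xs = np.linspace(lo, hi, w)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([xx.ravel(), yy.ravel()], axis=-1)


def fourier_features(coords, num_freqs=4):
    """Map (x, y) to sin/cos features so the MLP can represent fine detail."""
    freqs = 2.0 ** np.arange(num_freqs) * np.pi              # (F,)
    proj = coords[:, :, None] * freqs                         # (N, 2, F)
    feats = np.concatenate([np.sin(proj), np.cos(proj)], -1)  # (N, 2, 2F)
    return feats.reshape(coords.shape[0], -1)                 # (N, 4F)


def word_attention(pixel_feats, words, w_q):
    """Hypothetical per-pixel attention over words: each pixel gets its own text context."""
    q = pixel_feats @ w_q                                     # (N, D) pixel queries
    logits = q @ words.T / np.sqrt(words.shape[1])            # (N, L) pixel-word scores
    logits -= logits.max(axis=1, keepdims=True)               # numerically stable softmax
    attn = np.exp(logits)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ words                                       # (N, D)


def modulated_linear(x, weight, bias, scale):
    """StyleGAN2-style modulation with per-pixel input-channel scales, then demodulation."""
    out = (x * scale) @ weight                                # (N, out)
    demod = np.sqrt((scale ** 2) @ (weight ** 2) + 1e-8)      # per-pixel output-column norms
    return out / demod + bias


class TextConditionedINR:
    """Hypothetical INR generator whose layer weights are modulated by text."""

    def __init__(self, text_dim=16, hidden=32, num_freqs=4, seed=0):
        rng = np.random.default_rng(seed)
        self.num_freqs = num_freqs
        dims = [4 * num_freqs, hidden, hidden, 3]
        self.weights = [rng.normal(size=(i, o)) for i, o in zip(dims[:-1], dims[1:])]
        self.biases = [np.zeros(o) for o in dims[1:]]
        # One linear hypernetwork head per layer: text context -> input-channel scales.
        self.heads = [rng.normal(scale=0.1, size=(text_dim, i)) for i in dims[:-1]]
        self.w_q = rng.normal(size=(dims[0], text_dim))

    def __call__(self, coords, words):
        feats = fourier_features(coords, self.num_freqs)
        ctx = word_attention(feats, words, self.w_q)          # (N, D)
        h = feats
        for i, (w, b, head) in enumerate(zip(self.weights, self.biases, self.heads)):
            h = modulated_linear(h, w, b, 1.0 + ctx @ head)
            if i < len(self.weights) - 1:
                h = np.where(h > 0, h, 0.2 * h)               # leaky ReLU
        return np.tanh(h)                                     # RGB in [-1, 1]


def render(model, words, h, w, lo=-1.0, hi=1.0):
    """Query the INR on an h x w grid spanning [lo, hi] in both axes."""
    return model(coord_grid(h, w, lo, hi), words).reshape(h, w, 3)


words = np.random.default_rng(1).normal(size=(5, 16))   # stand-in for 5 word embeddings
model = TextConditionedINR()
low = render(model, words, 5, 5)                         # low-resolution inference
high = render(model, words, 9, 9)                        # denser grid ("super-resolution")
wide = render(model, words, 7, 7, lo=-1.5, hi=1.5)       # extrapolation beyond [-1, 1]
```

The denser grid contains the coarse grid's coordinates at every other pixel, so `high[::2, ::2]` matches `low`. Likewise, the interior of the widened grid matches `low`, and only its border is extrapolated.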

Qualitative Results from a discrete version of HyperCGAN

Qualitative Examples on CUB (Regions Outside the Red Rectangles Are Extrapolated)

Qualitative Examples on WIKI (Regions Outside the Red Rectangles Are Extrapolated)

Qualitative Examples on COCO (Regions Outside the Red Rectangles Are Extrapolated)

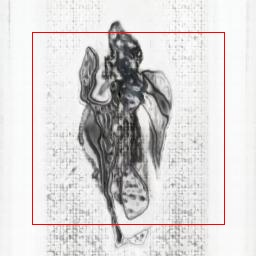

Failure Cases

HyperCGAN has the following limitations:

- Left: People's facial expressions are not rendered clearly.

- Middle: Blob-shaped artifacts can be visible.

- Right: The number of objects may not be represented accurately; instead, objects may be generated as repeating patterns related to the nature of the object.